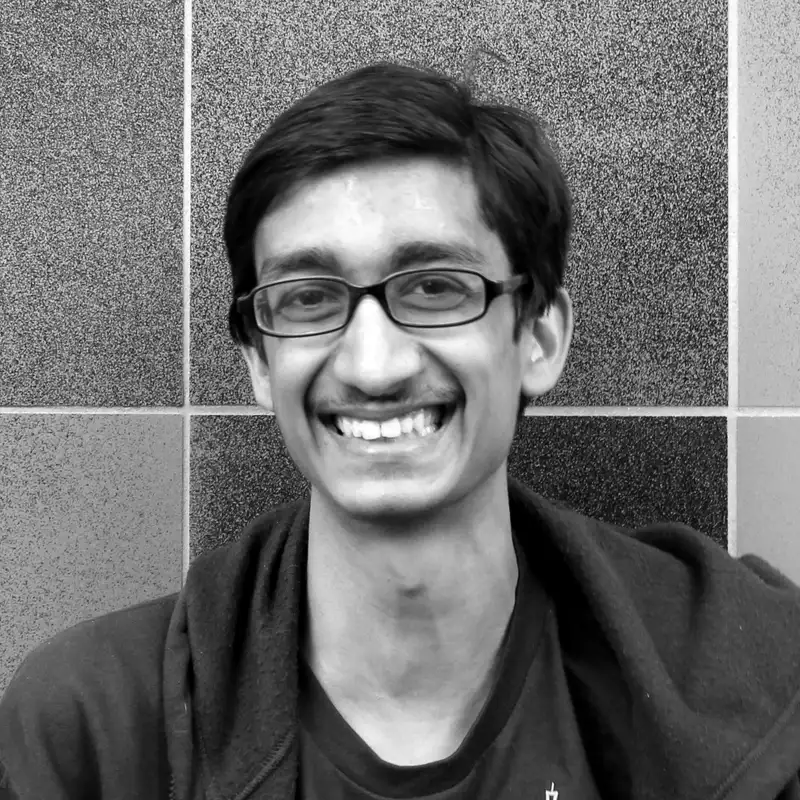

Rohin Shah

DeepMind Research Scientist Dr. Rohin Shah on Value Alignment, Learning from Human feedback, Assistance paradigm, the BASALT MineRL competition, his Alignment Newsletter, and more!

Dr. Rohin Shah is a Research Scientist at DeepMind, and the editor and main contributor of the Alignment Newsletter.

Featured References

The MineRL BASALT Competition on Learning from Human Feedback

Rohin Shah, Cody Wild, Steven H. Wang, Neel Alex, Brandon Houghton, William Guss, Sharada Mohanty, Anssi Kanervisto, Stephanie Milani, Nicholay Topin, Pieter Abbeel, Stuart Russell, Anca Dragan

Preferences Implicit in the State of the World

Rohin Shah, Dmitrii Krasheninnikov, Jordan Alexander, Pieter Abbeel, Anca Dragan

Benefits of Assistance over Reward Learning

Rohin Shah, Pedro Freire, Neel Alex, Rachel Freedman, Dmitrii Krasheninnikov, Lawrence Chan, Michael D Dennis, Pieter Abbeel, Anca Dragan, Stuart Russell

On the Utility of Learning about Humans for Human-AI Coordination

Micah Carroll, Rohin Shah, Mark K. Ho, Thomas L. Griffiths, Sanjit A. Seshia, Pieter Abbeel, Anca Dragan

Evaluating the Robustness of Collaborative Agents

Paul Knott, Micah Carroll, Sam Devlin, Kamil Ciosek, Katja Hofmann, A. D. Dragan, Rohin Shah

Additional References

Featured References

The MineRL BASALT Competition on Learning from Human Feedback

Rohin Shah, Cody Wild, Steven H. Wang, Neel Alex, Brandon Houghton, William Guss, Sharada Mohanty, Anssi Kanervisto, Stephanie Milani, Nicholay Topin, Pieter Abbeel, Stuart Russell, Anca Dragan

Preferences Implicit in the State of the World

Rohin Shah, Dmitrii Krasheninnikov, Jordan Alexander, Pieter Abbeel, Anca Dragan

Benefits of Assistance over Reward Learning

Rohin Shah, Pedro Freire, Neel Alex, Rachel Freedman, Dmitrii Krasheninnikov, Lawrence Chan, Michael D Dennis, Pieter Abbeel, Anca Dragan, Stuart Russell

On the Utility of Learning about Humans for Human-AI Coordination

Micah Carroll, Rohin Shah, Mark K. Ho, Thomas L. Griffiths, Sanjit A. Seshia, Pieter Abbeel, Anca Dragan

Evaluating the Robustness of Collaborative Agents

Paul Knott, Micah Carroll, Sam Devlin, Kamil Ciosek, Katja Hofmann, A. D. Dragan, Rohin Shah

Additional References

- AGI Safety Fundamentals, EA Cambridge

Creators and Guests

Host

Robin Ranjit Singh Chauhan

🌱 Head of Eng @AgFunder 🧠 AI:Reinforcement Learning/ML/DL/NLP🎙️Host @TalkRLPodcast 💳 ex-@Microsoft ecomm PgmMgr 🤖 @UWaterloo CompEng 🇨🇦 🇮🇳